AI Shouldn't Make You Anxious

How the Rest of Us Can Succeed in Today's AI-Empowered World

Welcome to Outsmart the Learning Curve! Fresh self-improvement ideas supported with accessible research. Book now available.

Is AI Causing the Sky to Fall?

Every week I meet with students and young professionals seeking career guidance, and lately our conversations inevitably turn to AI. The anxiety is palpable and understandable—hardly a day goes by without headlines about AI disrupting another industry or profession. But beneath the fear lies a more complex and ultimately more hopeful story.

Many of the worst scenarios assume that AI will continue to improve at an exponential pace until it gets to a magical point referred to as Artificial General Intelligence (AGI), when AI systems can match or exceed human-level performance. There’s a lot of debate over if and when we might reach AGI, but the most breathless optimists see it coming as soon as 2025!

But hold on. Let’s take a breath.

AI Progress May Be Plateauing

Maybe it’s a bandwagon thing or the echo chamber, but several analysts and news outlets ran stories in the last few weeks (Benedict Evans, Axios, The Independent) describing how the latest versions of the big AI models from OpenAI, Anthropic, and Google are not making the huge leaps they were just a year ago.

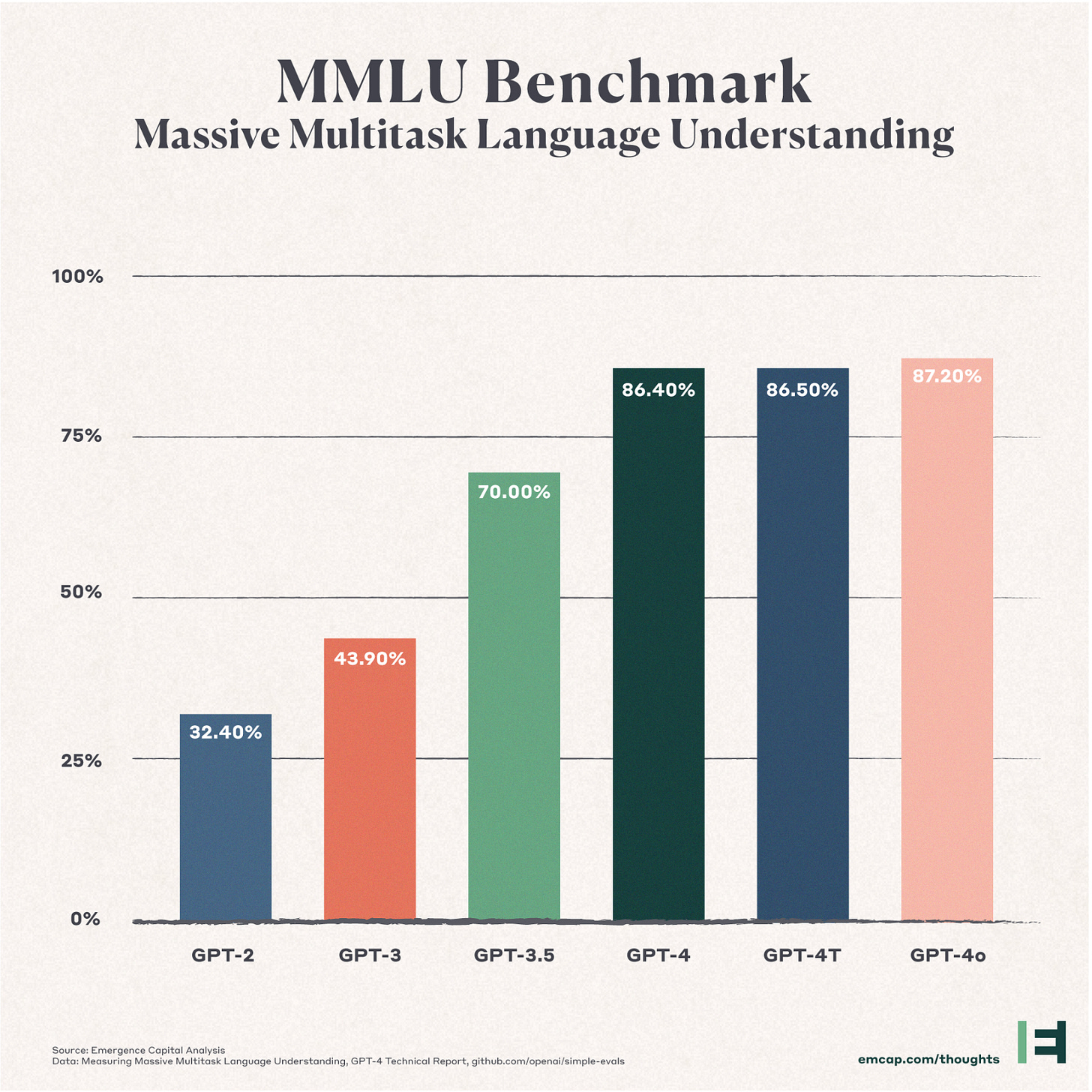

For example, the graphic below illustrates little improvement on language understanding for OpenAI’s GPT models over the last three iterations.

Whether this is a temporary blip or perhaps the end of dramatic improvements for a while is unclear. But the evidence is pretty compelling that AI may be hitting a wall.

Why Could AI Be Hitting a Wall?

Jeff Hawkins[1] has spent the last 20 years studying intelligence at Numenta, his brain-inspired AI research and technology company. Hawkins is not only predicting that the current approach to AI will plateau; he’s also explaining why.

Hawkins outlined his arguments in a recent Fast Company article, saying the neural network-based approach used to build large language model (LLM) chatbots like ChatGPT simply won’t work to achieve AGI or “real intelligence.” His main points were:

No Reality Check. Current AI models have no way to validate their output with the real world. That’s why your favorite chatbot will confidently state certain living authors have died or generate false citations to non-existent academic papers. Humans, on the other hand, can check official records or contact the authors; AI can only work with patterns in its training data.

Weak Discovery Capability. While AI can predict protein structures from existing data (as DeepMind’s AlphaFold does), it can't tell us if there's life on Mars. That requires actually going there and looking. No amount of training data can replace physical exploration.

Language-Only Understanding. When an AI model generates a phrase like "soft cat fur," it’s just matching words. Unlike a human, who has felt cat fur and knows its texture, warmth, and how it differs from synthetic fur, current AI has no sensory experience to ground its output language.

Hawkins is not saying AGI won’t happen, though. His point is that the language-based neural network path that OpenAI, Google, and others are on will plateau, and we need to take a different approach to level up to AGI.

Hawkins's vision? Study the biology of the brain itself—the only truly intelligent object we know—and build on its fundamental principles to create intelligence. This is a distinct departure from current AI’s dependency on neural networks, which despite the name, are merely simplified mathematical approximations rather than anything close to simulations of biological processes.

Hawkins has spent the better part of his life pursuing this vision. But even if his path to AGI is correct, it’s not clear how long it will take to get there.

Can Today’s AI Replace Human Creativity?

Just as the reports of AI plateauing and Jeff Hawkins’s article came out, I stumbled on a video of Ben Affleck speaking quite confidently and eloquently about how and why AI will never get past a “craftsman” level of artistry for film. From the clip, it’s clear that he’s studied the topic and has a definitive point of view.

While Affleck acknowledges AI's impressive ability to imitate, like a craftsman learning techniques by watching others, he argues it fundamentally lacks the artistic discernment that elevates “craft” to “art.” His key observations about AI's limitations mirror Hawkins's points but from a creative industry perspective:

No Reality Check in Art. Hawkins notes AI's inability to validate its conclusions against reality. Affleck makes the same point through an artistic lens, arguing that "knowing when to stop" and exercising taste requires a kind of judgment that "currently entirely eludes AI's capability."

Weak on True Creativity. While AI can generate "excellent imitative verse," Affleck emphasizes it's just "cross pollinating things that exist. Nothing new is created." This directly parallels Hawkins's point about AI being limited to patterns in its training data, unable to discover genuinely new information.

Language Only Limits. Where Hawkins points to AI's inability to understand "soft cat fur" without sensory experience, Affleck highlights how AI fails to capture the chemistry between actors that can elevate a scene. Both are describing AI's fundamental disconnect from real-world, physical interactions.

Affleck isn't a complete AI skeptic. He sees it revolutionizing the "laborious, less creative, and more costly aspects of filmmaking," particularly in visual effects. But for core creative functions? He believes film will be "one of the last things" to be fully replaced by AI—if it ever happens at all.

But Today’s AI Is Already Changing Everything

So what if AI never gets much better than it is right now? It’s already so powerful and effective that it’s replacing or reducing the scope of many jobs. The questions I’m getting from mentees are “What should I study to prepare for an AI-enabled job opportunity?” and “What career pivot is AI-proof?”

Frankly, there are no perfect answers to these questions because AI is so new, and people are still figuring out how to incorporate it into their workflows. However, a good starting point can be derived from Hawkins’s and Affleck’s arguments, which define a boundary, or edge, between where AI excels and where it fails.

While today’s AI is revolutionary, there are “edges” to its capabilities, and finding those edges provides a glimpse into the opportunities for the rest of us.

So what are the job opportunities given the limitations of today’s AI?

Direction Setters and Guardrails. While AI excels at execution, today it requires human guidance on what to create and, importantly, when to stop. So direction setters like creative directors, people managers, and product managers will continue to exist. In addition, those who set vision and boundaries, like ethics or compliance officers, will also continue to be important. Both of these role types require understanding AI capabilities and how AI meshes with business needs. AI could not have conjured the need for this article, let alone tied together timely news stories, a Fast Company article, and a relatively obscure YouTube video. I did that. While I used AI to help polish the language, the core insights and connections came from human judgment.

Relationship Builders. Yes, it’s possible to create a “relationship” with AI apps like character.ai. But my sense is that humans prefer to trust other humans when it comes to their health, their family, and their money. So healthcare providers, physical therapists, social workers, and skilled trade workers will remain valuable because they combine human sensory feedback with expert judgment. In addition, while I see ads for AI-based sales development representatives (SDRs) for the laborious lead generation part of sales, it’ll be a long time before anyone buys a complex software solution, an expensive piece of real estate, or a company from a bot. Relationships are about trust, and right now it’s very hard to build a trusting relationship with AI.

Physical World Bridgers. The physical world's complexity demands professionals who can bridge AI's text-only understanding of the world with things only humans can touch and feel. For example, AIs alone can’t be field researchers digging for dinosaur bones, marine biologists looking for undiscovered species, or any kind of explorer who gathers real-world data. Nor can AI alone handle quality control for real-world products that you have to touch and feel.

Reality Validators. Fact-checkers and research verification specialists will be crucial as AI continues generating plausible but potentially false information. Importantly, this role will be part of nearly every knowledge-based job. With AI’s incredible ability to write and mimic expertise, a valuable skill we should all be building is telling truth from fiction, no matter what the source.

IMPORTANT: The scope of all the above jobs will change and be enhanced by AI. In fact, the people who will succeed in the above jobs will be those using AI most effectively, and those who don’t will be left behind.

AI Is the Great Equalizer

I recommend to nearly everyone I talk to that they should be learning, embracing, and testing the edges of AI as their side gig. That’s what I’m doing. Even if today’s AI has reached its functional limit, its current ability to answer prompts and queries drawing on a huge percentage of human knowledge is beyond incredible.

The good news is that AI’s power is empowering for the rest of us.

In his recent book, Co-Intelligence: Living and Working with AI, Wharton professor Ethan Mollick makes a compelling case that top writers, consultants, and students benefit less from AI than average performers.

For example, Mollick ran a study of nearly 800 Boston Consulting Group consultants randomized into two groups: an experimental group taught how to use GPT-4 to perform 18 consulting tasks, and a control group, which performed the tasks without AI help. The performance gap between the top and bottom consultants in the control group was 22%. But for the group who used GPT-4, the gap shrank to just 4%. Conclusion? GPT-4 brought the lowest performers to nearly the same level of output as the top performers, truly leveling the field.

Embrace AI

No, AI isn’t causing the sky to fall. But we clearly all must adapt to our new AI-enhanced world. While current AI may be plateauing a bit short of human-level intelligence, its impact is already profound. And success in this new era won't come from ignoring or hiding from AI, but from understanding the edge between its limitations and its strengths.

Bottom line: I’m not afraid of AI becoming too powerful. I worry that too few people are embracing its current capabilities, and that too many are either burying their heads in the sand or waiting for a fearful future that may never arrive.

What do you think?

I’m thrilled to announce that the Outsmart the Learning Curve book is now available for preorder!

The early reviews have been incredibly encouraging. Educators, therapists, career counselors, and mentors agree that the book delivers both inspiration and practical advice for a variety of readers including:

Career changers ready to reinvent themselves

Students who are unsure about their future paths

Job seekers, especially those feeling disheartened by a challenging job market

Outsmart the Learning Curve would make a thoughtful gift for anyone on these paths and will be available December 10th, 2024.

[1] Full disclosure: Jeff and I worked together for many years at Palm and Handspring, while his life-long interest in brain research was largely on hold.

From your fingertips to God’s URL Joe. This is badly needed but I’m still not convinced that AI “enhancement” is a thing. That’s why they call it “Artificial” for Jaysus’ sake.

Each time my “auto-incorrect” makes an unnoticed change I vow to turn it off but the fear of misspealling [sic] lingers.

A Hong Kong kolleague (I like alliteration 🤷🏽) whose English is not great but whose IT skills are decided to try ChatGPT to send a 100 word email in English to his wife saying how much and why he loves her.

When she read it, she thought it was a suicide note. Only their precocious 10 year old daughter stopped her from sending EMS to his office. She read it and said “Mom that’s ChatGPT—Dad couldn’t write that!”